Just a few years ago, testing in marketing was not the norm. In 2013, Steve Ebin, head of growth at Optimizely, assumed that A/B tests were not effective for low-traffic websites, and so he didn’t run them. When they decided to change their pricing, however, he stumbled upon an insight in a customer interview.

The customer hated the language in their pricing page call to action. It said “Contact sales.” Prompted by this anecdote, the team decided to set up a test on the page. The control version had the original copy, and the new version said “Schedule demo.”

The new version won out, and won big. Optimizely observed a greater than 300% improvement in conversions.

Though this is a rare case, testing is a handy tool for every aspect of your marketing stack, including video. Successful tests usually result in incremental, rather than explosive, growth. Often, tests don’t produce any useful results. But if you keep at it, you will see meaningful improvements.

To ensure consistency, you need to have a system in place. You need a way to test new marketing ideas strategically and analyze your findings diligently. You need to think like a scientist.

The scientific method for growth

More and more, marketing is becoming a scientific discipline. With so much data and technology available, marketers can make landmark discoveries about the products they’re selling and the customers who buy them, if they just know where to look.

Like scientists, marketers can validate their ideas through testing. Brian Balfour, former VP of growth at HubSpot, came up with a growth model for marketers based on the scientific method.

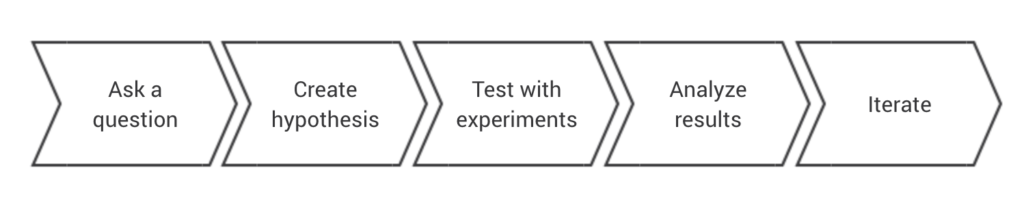

He boils the method down to five steps, much like the traditional scientific method from grade school:

- Define Your Goals

- Ask the Right Questions

- Develop Your Hypothesis

- Test & Analyze Your Ideas

- Refine, Reject, Repeat

Marketers can use a simplified version of Balfour’s process to validate their ideas for new ad campaigns.

In this process, the line of inquiry always ends in action, no matter how minor. We’ll discuss the steps as grouped into three phases:

- Phase 1: Ask a question and create a hypothesis – Start at high-level company goals and work with managers to determine what you need to discover in order to win for your company. Boil down one goal into a hypothesis that can be tested.

- Phase 2: Test with experiments – Split test ad content, try out different tools, start new channels, or segment audiences.

- Phase 3: Analyze results and iterate – See how the new variable performed against control metrics (leads generated or conversions from past content, emails, video, etc.). What could make this [content, email, video] better? Use what you’ve learned in your next experiment.

By subjecting new ideas to tests and experiments, you can create a more objective measure of success, and one that everyone on the team can contribute to. If the process disproves a claim, you can move on to the next idea quickly and confidently.

Each of these steps is an opportunity to gather knowledge and find ways to improve. The more times you repeat the process, the better you can predict customer behavior and replicate successful campaigns. Let’s walk through each phase.

Phase 1: Ask a question and create a hypothesis

Say you’re the head of marketing at a new e-commerce company that sells pillows. You’ve produced a short marketing video for your iPhone app and put it on Instagram. The clickthrough rate is high, but the conversion rate is really low—less than 1%. Management wants to see at least 3% conversion on video ads in the next month, or else they will divert resources to another marketing channel. You’re a video believer. How do you go about solving this problem?

First, start by turning the problem into a question that can be answered through testing. Make sure you have a specific goal in mind.

How can we improve the conversion rate from our video?

Then, take the problem to your team. Get as much input as possible and come up with a few hypotheses together.

- Hypothesis 1: The app is confusing. If we redesign the homepage, then people will know where to click to buy pillows.

- Hypothesis 2: Our customers are mostly women. If we eliminate all men from our audience, more potential customers will be exposed to the ad.

- Hypothesis 3: The embedded CTA is unclear. If we change it from “sleep better tonight” to “buy now” to match Facebook’s button, people will be primed to buy.

Hypothesis 1 is more product-related than marketing. It requires input from engineering and design and will take a lot of time and manpower to implement. While it’s always good to test homepages, this project is best saved for a later date.

Hypothesis 2 relates to segmentation, which is a valid testing area. In this case, though, the issue is not who’s seeing and clicking on the ad but what happens after the click: people bounce without buying. So you’ll save the segment experimentation for later.

Hypothesis 3 is highly specific and easy to change in your video-making software without any input from product. You go with this idea.

A good hypothesis should be something you can easily test in a day, week, or month. CTAs are really easy to iterate on and can result in big conversion wins. By making the question and hypothesis phase a collaborative process, you can make sure you’re pursuing as many angles as possible.
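Whether a hypothesis fits into a day, a week, or a month depends partly on how much traffic the ad gets. As a rough sketch, the standard normal-approximation formula for comparing two conversion rates can estimate how many clickthroughs each version needs. The 5% significance level and 80% power used here are conventional defaults, not figures from the pillow example, and the function name is made up for illustration.

```python
import math
from statistics import NormalDist


def clicks_per_variant(p_control: float, p_target: float,
                       alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate clickthroughs needed per ad to detect p_control -> p_target.

    Uses the common normal-approximation formula for a two-sided
    comparison of two proportions. Treat the result as a ballpark.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_target) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_power * math.sqrt(p_control * (1 - p_control)
                              + p_target * (1 - p_target))
    ) ** 2
    return math.ceil(numerator / (p_target - p_control) ** 2)


# Pillow example: roughly 1% conversion today, 3% is management's goal.
needed = clicks_per_variant(0.01, 0.03)
print(f"About {needed} clickthroughs per ad")
```

If your ad collects that many clickthroughs in a few days, the test is an easy one to schedule. Smaller expected lifts need far more traffic, which is one reason to start with changes you expect to matter.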

Phase 2: Test with experiments

Once you have your hypothesis nailed down, it’s time to conduct your test. Whether a test is successful or not depends on your ability to track and control variables.

Tests and their corresponding results only apply to the metrics and tools at hand. Facebook, for instance, has strict ad formats, and Facebook Analytics measures engagement in certain ways. Not only that, but customers behave differently on Facebook than on other platforms. To make video ads, you have to follow the rules. These constraints are ultimately helpful because they narrow your test’s focus so you only have a few variables to work with.

The simplest test you can run is an A/B, or split, test. The control will be the version whose results you can predict, so it establishes a norm. In our pillow company example, the control (A) will be the first video ad. Here’s what we know about ad A:

- Is a 10-second video featuring a couple at their hectic jobs and then cutting to getting into bed and turning off the light

- Has the tagline, “You’ve got a big day tomorrow”

- Appears on mobile and desktop

- Has an embedded CTA that says “Sleep better tonight”

- Has a CTA button that says “buy now” at the bottom right

- Runs for 1 week

The experimental variant (B) will keep everything the same except for the embedded CTA, which will say “Buy now.”
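One way to keep yourself honest about "everything the same, except for one thing" is to write both ads down as data and compare them. The sketch below does that for the pillow example. The field names and helper functions are hypothetical and are not part of any ad platform's API.

```python
# Hypothetical field names; the values come from the pillow ad example.
AD_A = {
    "length_seconds": 10,
    "tagline": "You've got a big day tomorrow",
    "placements": ("mobile", "desktop"),
    "embedded_cta": "Sleep better tonight",
    "cta_button": "buy now",
    "run_days": 7,
}

# Variant B copies the control and overrides a single field.
AD_B = {**AD_A, "embedded_cta": "Buy now"}


def changed_fields(control: dict, variant: dict) -> list[str]:
    """Return the sorted names of fields whose values differ between two ads."""
    keys = control.keys() | variant.keys()
    return sorted(k for k in keys if control.get(k) != variant.get(k))


def is_clean_split_test(control: dict, variant: dict) -> bool:
    """Treat a split test as clean when exactly one field differs."""
    return len(changed_fields(control, variant)) == 1


print(changed_fields(AD_A, AD_B))       # ['embedded_cta']
print(is_clean_split_test(AD_A, AD_B))  # True
```

If a second field sneaks in, say a longer run time for B, the check fails and you know the result can no longer be pinned on the CTA alone.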

You can and should split test every aspect of an ad, as long as you do so systematically. Shakr has tons of video templates and ad formats for you to test, making it easy to swap content in and out. With lots of variables to work with, you can pursue your hypotheses about video ads without reaching out to a designer or producer.

Shakr’s easy video maker includes tons of opportunities to test and iterate.

You can test any number of small or large variables, such as:

- shape of the frame

- length of the video

- transition types

- color scheme

- typeface

- the level of energy conveyed

- quality of the video

- which stock models you use

As long as you have the budget, you can conduct all of these tests simultaneously. However, each test should have only one experimental variable. Otherwise, you won’t be able to tell which change caused the result.

By getting in the habit of testing everything, you can optimize your marketing stack in your sleep. While you’re setting up one test, the results of others will be rolling in.

Phase 3: Analyze results and iterate

Opening your results the day after a test is complete can be like getting your final exam grades back. It’s nerve-wracking and exciting. Since you’ve followed the process, it should be easy to determine the results of your experimentation. But unlike final exams, your marketing tests are never truly “complete.” There are always opportunities to score higher.

In our example, let’s say the hypothesis turned out to be correct. “Buy now” converted at 3.2%, while “Sleep better tonight” stayed at less than 1%. To get the most value out of your test, consider the following questions:

- What can you replicate?

- What should you change?

- What are other possible reasons for this result?

The answers to these questions give you insights you can act on and feed back into your testing machine. Since B was the decisive winner here, you can plan to keep that CTA for the next iteration. But maybe you also want to slow the video down, make it vertical, or try it in black and white.
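Before calling B a decisive winner, it can help to rule out one of the "other possible reasons" for the result: plain chance. A minimal sketch of that check is a two-proportion z-test, written here with only the standard library. The click and conversion counts are invented for illustration, since the example only gives the rates.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the gap between two conversion rates.

    Assumes at least one conversion overall, so the pooled rate is not zero.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both ads convert equally well.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 2,000 clickthroughs per ad, 0.9% vs. 3.2% conversion.
z, p = two_proportion_z_test(conv_a=18, n_a=2000, conv_b=64, n_b=2000)
print(f"z = {z:.2f}, p = {p:.2g}")
```

With counts like these, the p-value lands far below the usual 0.05 cutoff, so chance is an unlikely explanation. With only a few dozen clickthroughs per ad, the same rates could easily be noise.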

Like the hypothesis stage, the analysis can be a team endeavor. Sharing your findings will help others fill in the gaps in their knowledge and will also subject you to critiques and challenges.

If you keep a record of every test you run, you can avoid making the same mistakes over and over again, and apply your compounding knowledge to the next campaign.
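The record itself does not need to be fancy. Here is one possible shape for it, sketched as a small data class that renders to CSV text you could paste into a shared spreadsheet or save to a file. Every field name is an assumption about what is worth tracking, and the 0.9% control rate is a stand-in for "less than 1%."

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class ExperimentRecord:
    """One row in the test log (illustrative fields only)."""
    name: str
    hypothesis: str
    variable_changed: str
    control_rate: float
    variant_rate: float
    next_step: str


def render_log(records: list[ExperimentRecord]) -> str:
    """Serialize records to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=[f.name for f in fields(ExperimentRecord)]
    )
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


log = [
    ExperimentRecord(
        name="embedded-cta-copy",
        hypothesis="Changing the embedded CTA to 'Buy now' primes people to buy",
        variable_changed="embedded_cta",
        control_rate=0.009,  # hypothetical stand-in for "less than 1%"
        variant_rate=0.032,
        next_step="Keep the new CTA; test a vertical cut next",
    )
]
print(render_log(log))
```

Writing down the hypothesis and the next step alongside the numbers is the part that pays off later, because it tells the next person why the test was run and what it led to.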

Discover your next big win with testing

Science is based on the admission of how little we know. Widely accepted scientific theories such as evolution or relativity are only viewed as the best possible answer to the initial inquiry. The same goes for marketing. When you nail the perfect ad, there are still endless other possibilities and opportunities for discovery.

A well-maintained test machine ensures your team is always learning. By working together to solve problems, your marketing team will not only make incremental improvements but also set itself up for big breakthroughs.